Making Sense of the Modern Enterprise Data Challenge

Today’s organizations face an unprecedented data management crisis. According to recent studies, enterprises use an average of 976 different applications, with critical business data scattered across legacy systems, cloud platforms, and hybrid infrastructures. This fragmentation creates several costly problems.

The Hidden Costs of Data Silos

Slow Decision-Making

Teams wait weeks for data access requests to be fulfilled

Duplicate Efforts

Multiple departments recreate the same data processes

Compliance Risks

Inconsistent governance across data sources

Limited AI Potential

Machine learning models and AI agents lack comprehensive data access

A unified data management approach changes this equation entirely. Rather than forcing data into rigid warehouses or complex ETL pipelines, modern data fabric architecture creates an intelligent integration layer that connects directly to existing data sources, enabling immediate access while maintaining security and governance standards.

Data Fabric Architecture: Definition and Core Concepts

What is a Data Fabric?

A data fabric is an enterprise data architecture that creates a unified, intelligent layer for accessing and managing information across your entire technology ecosystem. Unlike traditional data integration platforms that require moving or copying data, a data fabric establishes direct connections to source systems, enabling real-time data virtualization and seamless cross-platform analytics.
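To make the data-in-place idea concrete, here is a minimal Python sketch of a virtual view. It assumes two hypothetical sources: an in-memory SQLite table standing in for an operational database, and a Python list standing in for a CRM's API. All names are illustrative, not any product's API. Each query reads both sources at request time, pushes the filter down to the database, and joins the results in memory, so no copy is written to a central store.

```python
import sqlite3

# Hypothetical source 1: an operational database, queried in place with SQL.
orders_db = sqlite3.connect(":memory:")
orders_db.execute("CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
orders_db.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10, 99.5), (2, 11, 20.0), (3, 10, 5.25)],
)

# Hypothetical source 2: a CRM, stood in for by an in-memory list
# (a real connector would call the application's API on each request).
crm_customers = [
    {"customer_id": 10, "name": "Acme Corp", "region": "EMEA"},
    {"customer_id": 11, "name": "Globex", "region": "AMER"},
]


def fetch_orders(min_amount: float) -> list:
    """Push the filter down to the source database instead of copying the table."""
    cur = orders_db.execute(
        "SELECT order_id, customer_id, amount FROM orders "
        "WHERE amount >= ? ORDER BY order_id",
        (min_amount,),
    )
    return [dict(zip(("order_id", "customer_id", "amount"), row)) for row in cur]


def fetch_customers() -> dict:
    """Read the CRM records live and index them by customer_id."""
    return {c["customer_id"]: c for c in crm_customers}


def orders_with_customers(min_amount: float) -> list:
    """A 'virtual view': join both sources at query time; nothing is persisted."""
    customers = fetch_customers()
    return [
        {**order, "name": customers[order["customer_id"]]["name"]}
        for order in fetch_orders(min_amount)
        if order["customer_id"] in customers
    ]


print(orders_with_customers(min_amount=10))
```

A production data fabric wraps connectors, query planning, caching, and security around this core, but the principle is the same: the view is computed on demand rather than materialized.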

How Data Fabric Architecture Works

Think of a data fabric as the “nervous system” of your data infrastructure. Just as your nervous system connects different parts of your body and enables instant communication, a data fabric connects disparate data sources and enables instant information flow throughout your organization.

Key Architectural Principles

Data-in-Place Strategy

Access data where it lives without migration

Intelligent Automation

AI-powered data discovery and cataloging

Universal Governance

Consistent policies across all data sources

Real-Time Processing

Live data access to any source

This architectural approach transforms fragmented data landscapes into cohesive, governed ecosystems where insights flow freely and business decisions accelerate.

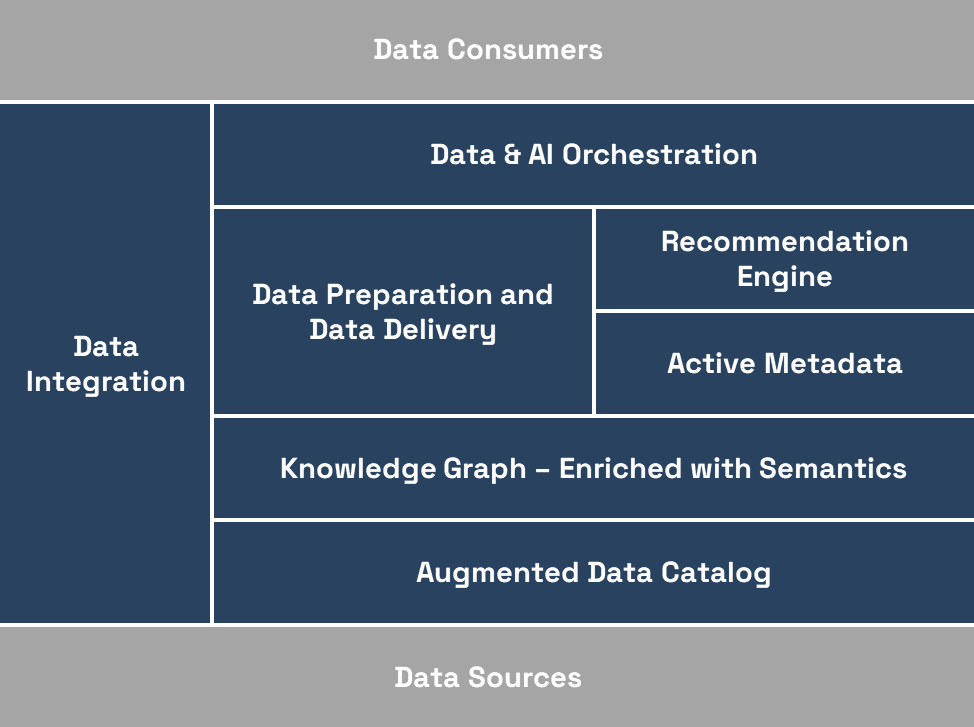

7 Essential Components of Data Fabric Architecture

Modern data fabric architecture is built on seven key components, each playing a unique role in creating a seamless, governed data environment. Here’s how each one contributes:

Augmented Data Catalog

Function: Organizes, classifies, and enriches metadata to make data assets easy to find and understand

Business Value: Empowers teams to find relevant data without technical barriers

Real-World Impact: Speeds up data discovery and improves user productivity
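A minimal sketch of the catalog's register, enrich, and search loop, assuming a hypothetical `DataCatalog` class with keyword-based classification rules. Augmented catalogs typically use ML-driven classification rather than the simple substring rules shown here; this only illustrates the data structure and the search path.

```python
from dataclasses import dataclass, field


@dataclass
class DataAsset:
    """One catalog entry: where the data lives plus business-facing metadata."""
    name: str
    source: str
    description: str
    tags: set = field(default_factory=set)


class DataCatalog:
    def __init__(self) -> None:
        self._assets = {}

    def register(self, asset: DataAsset) -> None:
        self._assets[asset.name] = asset

    def classify(self, name: str, rules: dict) -> None:
        """Enrich an entry: add a tag whenever a rule's keyword appears in its description."""
        asset = self._assets[name]
        text = asset.description.lower()
        asset.tags |= {tag for keyword, tag in rules.items() if keyword in text}

    def search(self, term: str) -> list:
        """Case-insensitive match against names, descriptions, and tags."""
        term = term.lower()
        return sorted(
            a.name
            for a in self._assets.values()
            if term in a.name.lower()
            or term in a.description.lower()
            or term in {t.lower() for t in a.tags}
        )


catalog = DataCatalog()
catalog.register(DataAsset("crm.customers", "crm", "Customer accounts with email addresses"))
catalog.register(DataAsset("erp.invoices", "erp", "Invoices billed to customers"))
catalog.classify("crm.customers", {"email": "PII"})
print(catalog.search("pii"))
print(catalog.search("customer"))
```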

Knowledge Graph

Function: Maps relationships between data points, providing deep context and meaning

Business Value: Simplifies data discovery and reveals hidden patterns

Real-World Impact: Improves data exploration and uncovers new opportunities
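The relationship mapping can be sketched as a graph built from (subject, relation, object) triples, explored with breadth-first search. The dataset and key names below are hypothetical. The point is that walking shared keys can surface indirect connections: here, customers reach product data only through invoices.

```python
from collections import deque

# Hypothetical relationships expressed as (subject, relation, object) triples.
triples = [
    ("crm.customers", "has_key", "customer_id"),
    ("erp.invoices", "has_key", "customer_id"),
    ("erp.invoices", "has_key", "product_id"),
    ("pim.products", "has_key", "product_id"),
    ("web.sessions", "has_key", "session_id"),
]


def build_graph(edges):
    """Undirected adjacency map, so relationships can be walked in either direction."""
    graph = {}
    for subj, _rel, obj in edges:
        graph.setdefault(subj, set()).add(obj)
        graph.setdefault(obj, set()).add(subj)
    return graph


def related(graph, start, max_hops):
    """Breadth-first search: every node reachable from `start` within `max_hops` edges."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    return {n for n in seen if n != start}


graph = build_graph(triples)
print(sorted(related(graph, "crm.customers", max_hops=4)))
```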

Data Integration

Function: Connects to all data sources—structured, semi-structured, and unstructured—across cloud, on-premises, and hybrid environments

Business Value: Eliminates the need for data duplication or heavy ETL pipelines

Real-World Impact: Accelerates data access and simplifies integration for better decision-making

Active Metadata

Function: Continuously updates based on real-time data usage and system changes

Business Value: Improves data quality, trust, and governance automatically

Real-World Impact: Enables proactive data management and enhances trust in data
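As a rough illustration of metadata that updates with use, the sketch below assumes a hypothetical `record_access` hook that the query engine calls after every read. The 20% null-rate threshold and the trust-score formula are arbitrary assumptions chosen for the example, not an industry standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ActiveMetadata:
    """Metadata for one dataset that changes with every use, not only at registration."""
    dataset: str
    query_count: int = 0
    users: set = field(default_factory=set)
    last_accessed: Optional[datetime] = None
    failed_quality_checks: int = 0

    def record_access(self, user: str, rows_returned: int, null_rate: float) -> None:
        """Hypothetical hook the query engine calls after each read of this dataset."""
        self.query_count += 1
        self.users.add(user)
        self.last_accessed = datetime.now(timezone.utc)
        # Assumed quality rule: an empty result or more than 20% nulls fails the check.
        if rows_returned == 0 or null_rate > 0.2:
            self.failed_quality_checks += 1

    @property
    def trust_score(self) -> float:
        """Fraction of accesses that passed the quality rule (1.0 if never accessed)."""
        if self.query_count == 0:
            return 1.0
        return 1 - self.failed_quality_checks / self.query_count


meta = ActiveMetadata("erp.invoices")
meta.record_access("analyst_a", rows_returned=1200, null_rate=0.01)
meta.record_access("analyst_b", rows_returned=0, null_rate=0.0)
print(meta.query_count, sorted(meta.users), meta.trust_score)
```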

Recommendation Engine

Function: Uses AI to suggest relevant datasets, combinations, or actions

Business Value: Accelerates analysis and unlocks new insights

Real-World Impact: Guides users to better data and insights faster
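One simple way to produce dataset suggestions is co-usage counting: recommend the datasets that past queries most often combined with the one a user is viewing. This is a sketch of that basic technique over a hypothetical query log, not a description of any vendor's recommendation model, which may use much richer signals.

```python
from collections import Counter
from itertools import combinations

# Hypothetical query log: the set of datasets each past query touched.
query_log = [
    {"crm.customers", "erp.invoices"},
    {"crm.customers", "erp.invoices", "pim.products"},
    {"crm.customers", "web.sessions"},
    {"erp.invoices", "pim.products"},
]


def co_usage(log):
    """Count how often each pair of datasets appears in the same query."""
    pairs = Counter()
    for datasets in log:
        for a, b in combinations(sorted(datasets), 2):
            pairs[(a, b)] += 1
    return pairs


def recommend(dataset, log, top_n=2):
    """Suggest the datasets most often used together with `dataset`."""
    scores = Counter()
    for (a, b), count in co_usage(log).items():
        if a == dataset:
            scores[b] += count
        elif b == dataset:
            scores[a] += count
    # Highest count first; ties broken alphabetically so results are deterministic.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:top_n]]


print(recommend("crm.customers", query_log))
```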

Data Preparation & Delivery

Function: Cleanses, enriches, and formats data on the fly to ensure it’s ready for analysis

Business Value: Reduces manual data prep work and speeds up insight generation

Real-World Impact: Enables more agile decision-making and improves operational efficiency
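"On the fly" preparation can be sketched as a Python generator that cleanses each record as it is read from the source, so no intermediate staging table is needed. The field names and cleansing rules (trim whitespace, normalize case, drop rows without an email) are hypothetical examples.

```python
from collections.abc import Iterable, Iterator


def clean(records: Iterable[dict]) -> Iterator[dict]:
    """Cleanse records lazily, one at a time, as they are read from a source."""
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if not email:
            continue  # drop rows that fail a basic completeness rule
        yield {
            "email": email,
            "country": (rec.get("country") or "unknown").strip().upper(),
            "revenue": round(float(rec.get("revenue") or 0), 2),
        }


# Hypothetical raw rows with inconsistent formatting and missing values.
raw = [
    {"email": "  Ana@Example.com ", "country": "pt", "revenue": "120.25"},
    {"email": "", "country": "us", "revenue": "10"},
    {"email": "bo@example.com", "country": None, "revenue": None},
]

for row in clean(raw):
    print(row)
```

Because `clean` is a generator, a consumer that stops early never pays for cleansing the remaining rows.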

Data & AI Orchestration

Function: Coordinates data workflows, analytics pipelines, and AI processes to ensure smooth delivery and use

Business Value: Speeds up time-to-insight and scales AI initiatives

Real-World Impact: Delivers reliable, consistent insights across the organization
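At its core, orchestration means running tasks in dependency order. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` on a hypothetical workflow whose task names are invented for illustration. A real orchestrator also handles retries, scheduling, and running independent tasks in parallel (which `TopologicalSorter` supports through its `prepare`, `get_ready`, and `done` methods).

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks that must finish first.
workflow = {
    "extract_orders": set(),
    "extract_customers": set(),
    "prepare_features": {"extract_orders", "extract_customers"},
    "train_churn_model": {"prepare_features"},
    "refresh_dashboard": {"extract_orders"},
}


def run(tasks: dict, actions: dict) -> list:
    """Execute tasks in an order that respects every dependency; return that order."""
    executed = []
    for task in TopologicalSorter(tasks).static_order():
        action = actions.get(task)
        if action is not None:
            action()  # run the work registered for this task
        executed.append(task)
    return executed


executed = run(workflow, {"train_churn_model": lambda: print("training churn model")})
print(executed)
```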

5 Key Business Benefits of Data Fabric Implementation

Implementing data fabric architecture delivers measurable improvements across multiple business dimensions:

Real-Time Business Intelligence & Analytics

Access and analyze data in near real-time, enabling immediate responses to market changes, operational issues, or customer behaviors. Organizations typically see 40-60% faster decision-making cycles.

Complete Data Silo Elimination

Create a single, comprehensive view of your business by connecting previously isolated systems. This unified perspective improves cross-departmental collaboration and reveals hidden business opportunities.

Enterprise-Grade Data Governance & Compliance

Apply consistent security policies, access controls, and regulatory compliance measures across all data sources simultaneously. Reduce compliance audit preparation time by up to 75%.

Self-Service Data Access for All Users

Empower business analysts, data scientists, and executive teams to find and use data independently, without IT bottlenecks. Reduce data request backlogs by 80-90%.

Accelerated AI & Machine Learning Innovation

Provide AI models with comprehensive, high-quality training data from across your organization. Organizations report 3-5x faster ML model development and deployment cycles.

Data Fabric vs. Alternative Data Management Approaches

Understanding how data fabric compares to other enterprise data solutions helps clarify when this architecture provides the most value.

| Comparison | Traditional Approach | Data Fabric Advantage |
|---|---|---|
| Data Fabric vs. Traditional Data Platforms, Data Warehouses & Data Lakes | Data warehouses and data lakes rely on moving and duplicating data into centralized repositories, leading to high infrastructure costs, slower access to insights, and limited flexibility to adapt to changing data needs. | A data fabric connects directly to data where it lives, eliminating the need for costly data duplication and providing real-time access to insights. This reduces infrastructure costs by 30-50% and enables faster, more agile decision-making. Unlike traditional platforms that create closed ecosystems and lock data into rigid silos, a data fabric is built on an open, interoperable foundation, integrating seamlessly with your existing tools and future-proofing your data strategy. |
| Data Fabric vs. ETL/ELT Data Integration Platforms | ETL and ELT pipelines move and transform data in batches, introducing delays and requiring constant updates and maintenance. These processes can be rigid and resource-intensive, limiting agility and making it harder to adapt to new business needs. | Data fabric eliminates the bottlenecks of batch-based data integration by providing real-time data virtualization, dynamic data preparation, and continuous governance, all without physically moving data. It not only streamlines access but also enriches data with context, ensuring it is trusted, secure, and ready for any analytics or AI initiative. By reducing integration maintenance overhead by up to 70%, data fabric frees data teams to focus on driving insights, not maintaining pipelines. |
| Data Fabric vs. Data Virtualization Solutions | Data virtualization platforms provide live access to data across systems without moving it, but often stop there. They typically lack built-in governance, active metadata, and advanced context, leaving data quality and trust gaps that limit enterprise-wide adoption. | Data fabric extends beyond access by weaving in governance, security, and active metadata management. It not only connects live data sources but also ensures data is contextualized, high-quality, and compliant. With AI-powered recommendations and intelligent data orchestration, data fabric transforms data access into a fully governed, insight-ready environment that supports real-time analytics, self-service, and advanced AI. |
| Data Fabric vs. Data Mesh | Data mesh focuses on decentralizing data ownership to domain teams, enabling them to manage their own data as products. It is an organizational and cultural approach that empowers domain-driven analytics but often lacks a unified technical layer for consistent access, governance, and security. | Data fabric provides the underlying technical foundation to support data mesh, offering a consistent, real-time data access layer, robust governance, and automated integration across all data sources. This complementarity enables organizations to implement data mesh at scale while maintaining trust and compliance. |

Data Fabric Use Cases Across Industries

Real-world implementations demonstrate data fabric’s versatility across different business scenarios:

Financial Services

Risk Management & Compliance

Challenge: Banks need real-time access to transaction data, customer profiles, and regulatory information across multiple systems

Solution: Data fabric connects core banking systems, trading platforms, and external data sources

Results: 50% faster fraud detection, real-time regulatory reporting, improved customer risk assessment

Healthcare

Patient Care & Research

Challenge: Medical data exists in electronic health records, imaging systems, laboratory databases, and wearable devices

Solution: Data fabric creates unified patient views while maintaining HIPAA compliance

Results: Reduced diagnosis time, improved treatment outcomes, accelerated clinical research

Manufacturing

Supply Chain Optimization

Challenge: Production data, supplier information, and logistics tracking exist in separate systems

Solution: Data fabric enables real-time supply chain visibility and predictive maintenance

Results: 25% reduction in inventory costs, 40% improvement in equipment uptime

Retail

Customer Experience & Inventory Management

Challenge: Customer data spans e-commerce platforms, point-of-sale systems, marketing tools, and mobile apps

Solution: Data fabric creates 360-degree customer profiles and real-time inventory tracking

Results: 30% increase in customer satisfaction, 20% reduction in stockouts

Data Fabric Implementation: Build vs. Buy Decision Framework

One of the most critical decisions organizations face when adopting data fabric architecture is whether to build a custom solution in-house or purchase a turnkey platform. This choice significantly impacts timeline, costs, and ultimate success. Here’s a comprehensive analysis to guide your decision.

The Hidden Challenges of Building Data Fabric In-House

While custom development might seem appealing for control and customization, organizations consistently underestimate the complexity and resource requirements involved:

Significant Upfront Investment Without Guaranteed ROI

Building enterprise data fabric requires substantial capital for infrastructure, specialized talent acquisition, and ongoing operational costs. Organizations typically invest $2-5 million before seeing any business value, with no certainty the solution will meet performance expectations.

Extended Development Timelines Delay Business Value

Custom data fabric projects typically require 18-36 months from conception to production deployment. During this extended timeline, business opportunities are missed, competitive advantages erode, and stakeholder confidence diminishes.

Complex Integration Expertise Requirements

Successfully connecting distributed data sources across cloud, on-premises, and hybrid environments requires deep expertise in multiple database technologies, cloud platform APIs, data virtualization, and legacy system connectivity: skills that most organizations lack internally and must hire for.

Scalability Limitations and Performance Bottlenecks

Custom-built solutions often struggle with enterprise-scale requirements, such as:

- Exponential growth in data volume

- Heavy concurrent user load

- Latency and reliability issues caused by geographic distribution

- Constant redevelopment to support new data sources and formats

Missing AI-Ready Data Context and Intelligence

In-house solutions typically lack sophisticated metadata management and automated data enrichment capabilities essential for modern AI and machine learning initiatives. Organizations spend additional months building these capabilities separately.

The Strategic Advantages of Turnkey Data Fabric Solutions

Purchasing a purpose-built data fabric platform reduces development risk while accelerating time-to-value:

Immediate Time-to-Value with Proven Architecture

Deploy a fully operational data fabric within days or weeks—not years—using proven architectures, pre-built connectors, and best practice governance frameworks.

Seamless Enterprise Integration

Eliminate complex integration projects with universal connectivity, API-first architecture, and real-time data synchronization across cloud, on-prem, and hybrid environments.

Enterprise-Grade AI Readiness

Access advanced AI and ML capabilities from day one, including automated metadata enrichment, intelligent recommendations, and built-in MLOps integrations.

Vendor-Managed Maintenance & Innovation

Focus on business outcomes while the vendor handles automatic updates, 24/7 monitoring, seamless scalability, and continuous innovation.

Build vs. Buy: Decision Matrix

| Evaluation Criteria | Build In-House | Buy Turnkey Platform |
|---|---|---|
| Time to Production | 18-36 months | 2-8 weeks |
| Initial Investment | $2-5M+ (plus ongoing costs) | $200K-800K annually |
| Technical Risk | High (unproven architecture) | Low (battle-tested platform) |
| Integration Complexity | Requires specialized team | Pre-built connectors included |
| AI/ML Readiness | Months of additional development | Built-in from day one |
| Scalability Assurance | Unknown until tested at scale | Proven across enterprise deployments |
| Ongoing Maintenance | Full internal responsibility | Vendor-managed |
| Feature Innovation | Limited by internal resources | Continuous vendor-driven updates |
| Regulatory Compliance | Custom implementation required | Enterprise-grade controls included |

Comprehensive FAQ: Data Fabric Implementation

Do we need to migrate our data to implement a data fabric?

No. Data fabric’s core value proposition is “data-in-place” access. The platform connects to your existing databases, cloud storage, and applications without requiring data movement or duplication. This approach reduces implementation time by 60-80% compared to traditional data warehouse projects.

How does a data fabric affect query performance?

Modern data fabric platforms use intelligent caching, query optimization, and parallel processing to deliver performance that can match, and for cached or multi-source queries often exceed, querying each database directly. The unified query engine can optimize across multiple sources simultaneously, reducing overall response times.
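Two of the techniques named above, result caching and parallel fan-out across sources, can be sketched with the Python standard library. The three sources and their 0.3-second latencies are simulated assumptions; the sketch shows the mechanism, not a benchmark of any platform.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

# Simulated round-trip latency (seconds) for three hypothetical remote sources.
SOURCE_LATENCY = {"warehouse": 0.3, "crm": 0.3, "erp": 0.3}


@lru_cache(maxsize=128)
def query_source(source: str, query: str) -> tuple:
    """Simulate a remote call; repeated (source, query) pairs are served from the cache."""
    time.sleep(SOURCE_LATENCY[source])
    return (source, query, "result rows")


def federated_query(query: str) -> list:
    """Send the query to every source concurrently rather than one after another."""
    with ThreadPoolExecutor(max_workers=len(SOURCE_LATENCY)) as pool:
        return list(pool.map(lambda source: query_source(source, query), SOURCE_LATENCY))


start = time.perf_counter()
federated_query("revenue by region")
first = time.perf_counter() - start  # roughly 0.3 s in parallel, versus about 0.9 s sequentially

start = time.perf_counter()
federated_query("revenue by region")
second = time.perf_counter() - start  # close to 0 s: all three results come from the cache

print(f"first call: {first:.2f}s, repeat call: {second:.2f}s")
```

A real engine also has to decide when cached results are stale; `lru_cache` has no expiry, so it is only a stand-in for that part.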

Will a data fabric work with our existing BI tools?

Yes. Data fabric platforms provide standard APIs, ODBC/JDBC connections, and native integrations with popular BI tools like Tableau, Power BI, Looker, and Qlik. Your existing dashboards and reports continue working without modification.

How quickly does a data fabric deliver ROI?

For organizations buying a turnkey data fabric platform, returns typically begin quickly, with full ROI often achieved within a few months. Key drivers include faster analytics delivery (5-10x speed improvement), reduced data preparation time (up to 80% faster), and elimination of manual integration efforts. In contrast, building a data fabric in-house typically requires 12-18 months post-implementation just to reach full ROI, due to extended development and integration timelines.

How does a data fabric handle governance and compliance?

Data fabric platforms include built-in governance capabilities that automatically enforce data privacy policies, access controls, and audit logging across all connected data sources. This centralized approach often improves compliance posture compared to managing policies across individual systems.
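A minimal sketch of centralized enforcement, assuming a hypothetical column-level `POLICY` table and an in-memory audit log: every read, whatever its source, passes through one function that masks restricted columns by role and records who accessed what. Real platforms typically express policies declaratively and write audit trails to durable storage.

```python
from datetime import datetime, timezone

# Hypothetical column-level policy: roles allowed to see each sensitive column unmasked.
POLICY = {
    "email": {"compliance_officer"},
    "ssn": set(),  # no role sees this column in clear text
}

audit_log: list = []


def governed_read(rows: list, user: str, role: str, source: str) -> list:
    """Apply one policy to rows from any source and append an audit entry."""
    result = [
        {
            col: val if col not in POLICY or role in POLICY[col] else "****"
            for col, val in row.items()
        }
        for row in rows
    ]
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "source": source,
        "rows": len(result),
    })
    return result


# The same rules apply whether rows come from a CRM or an HR system.
print(governed_read([{"name": "Ana", "email": "ana@example.com"}], "u1", "analyst", source="crm"))
print(governed_read([{"name": "Bo", "ssn": "123-45-6789"}], "u2", "compliance_officer", source="hr"))
```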

Who should use a data fabric?

Data fabric architecture is designed for any organization that needs to manage complex, distributed data, whether you’re a large enterprise, a growing mid-sized company, or even a smaller team dealing with multiple data sources. It’s ideal for businesses that want to:

- Simplify data complexity

- Empower data teams to work faster and more efficiently

- Enable AI and advanced analytics without relying on slow, manual processes

A data fabric’s open, flexible architecture adapts to new data sources, technologies, and analytics needs. It eliminates rigid pipelines and manual integrations, giving you an agile data foundation that grows with your business.

How is data fabric different from data mesh?

Data mesh is an organizational approach that decentralizes data ownership to domain teams, while data fabric provides the technical infrastructure for data access and governance. Many organizations successfully combine both: data mesh for organizational structure and data fabric for technical implementation.

How is data fabric different from data virtualization?

While data virtualization focuses solely on real-time data access, data fabric goes further by weaving in governance, context, and intelligent recommendations. It’s a complete architecture for data access, management, and delivery.

Can a data fabric work across multi-cloud and hybrid environments?

Yes! Data fabric architecture is designed to connect and unify data from any environment: cloud, on-premises, or hybrid. It integrates data across AWS, Azure, Google Cloud, and private clouds to deliver a consistent, real-time view.

Does a data fabric support real-time streaming data?

Data fabric platforms natively support both streaming and batch data processing. They can ingest real-time event streams (from Kafka, Kinesis, etc.) while simultaneously accessing batch data sources, providing a unified interface for both access patterns.
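A unified interface over batch and streaming sources can be sketched by merging both into one time-ordered iterator. Here a Python list stands in for a batch source and a `queue.Queue` stands in for a Kafka or Kinesis consumer (an assumption for illustration; no streaming client library is used). Both inputs must already be sorted by timestamp for `heapq.merge` to produce a correctly ordered result.

```python
import heapq
import queue
from collections.abc import Iterator

# Batch source: historical records, e.g. from a nightly extract (hypothetical data).
batch_records = [
    {"ts": 1, "event": "order_created"},
    {"ts": 4, "event": "order_shipped"},
]

# Streaming source: a thread-safe queue standing in for a stream consumer.
stream = queue.Queue()
for rec in ({"ts": 2, "event": "payment_received"}, {"ts": 5, "event": "order_delivered"}):
    stream.put(rec)


def drain(q: queue.Queue) -> Iterator[dict]:
    """Yield whatever is currently buffered on the stream without blocking."""
    while True:
        try:
            yield q.get_nowait()
        except queue.Empty:
            return


def unified_events() -> Iterator[dict]:
    """One time-ordered interface over both access patterns."""
    return heapq.merge(batch_records, drain(stream), key=lambda r: r["ts"])


events = list(unified_events())
print([e["event"] for e in events])
```

Over a truly unbounded stream, an engine would also need watermarks to decide when late events can no longer arrive; `drain` sidesteps that by reading only what is already buffered.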

Can a data fabric replace ETL pipelines?

In many cases, yes. Data fabric eliminates the need for traditional ETL by providing direct data access and real-time transformation capabilities. However, some organizations maintain hybrid approaches, using data fabric for real-time access and ETL for specific data warehouse loading requirements.

What is active metadata?

Active metadata dynamically captures how data is used, where it flows, and who is accessing it, in real time. In a data fabric, this metadata powers governance, trust, and intelligent recommendations, enabling smarter data usage and compliance.

What types of data can a data fabric support?

A data fabric can support many types of data, including structured data from databases, semi-structured formats like JSON and XML, and unstructured data. It’s designed to connect and integrate data in any format, wherever it resides in your organization.

Data Fabric Glossary: Key Terms & Concepts

Data Fabric

A modern data architecture that creates a unified, intelligent layer across all your data sources (on-premises, cloud, and hybrid), enabling real-time data access, governance, and analytics without data duplication.

An approach that makes it easy for users to find, explore, and access data across all sources from a single location—eliminating data silos and accelerating insights.

Data Virtualization

Technology that provides unified access to data across multiple sources without physically moving or copying the data to a central location.

Data Catalog

A centralized inventory of organizational data assets that includes metadata, lineage information, and business context to improve data discovery.

Active Metadata

Dynamic metadata that continuously updates based on data usage patterns, quality metrics, and system interactions, enabling automated governance and optimization.

Knowledge Graph

A graph-structured model of the relationships between different data entities, enabling intelligent data discovery and contextual recommendations.

Data Integration

The process of connecting and combining data from multiple sources (cloud, on-premises, or hybrid) to create a unified view without duplicating or moving the data.

Data & AI Orchestration

The automated coordination of data flows, analytics pipelines, and AI processes across systems to ensure smooth and efficient data delivery.

Data Silos

Isolated collections of data that aren’t easily accessible across teams or systems, often slowing down decision-making and collaboration.

Semantic Layer

A business-friendly layer in the data architecture that translates complex data models into intuitive terms for non-technical users, enhancing self-service analytics and AI.

Universal Governance

Consistent application of data policies, security controls, and compliance measures across all data sources within an organization.

Data Lineage

A record of the origin, movement, and transformation of data through systems and processes, which is crucial for data trust, governance, and compliance.

Transform Your Data Strategy: Next Steps

Data fabric architecture represents a fundamental shift from traditional data management approaches—moving from rigid, centralized systems to flexible, intelligent integration platforms that adapt to your business needs.

The organizations that implement data fabric successfully don’t just improve their technical capabilities; they transform how their teams make decisions, collaborate across departments, and respond to market opportunities. By connecting data where it lives and making it accessible to everyone who needs it, data fabric creates a competitive advantage that compounds over time.